Alright, buckle up! We’re diving deep into the fascinating, and sometimes a bit scary, world of AI and privacy. We’ll explore how these super-smart systems are changing our lives and what that means for our personal information. And don’t worry, we’ll keep it casual, like we’re just chatting over a cuppa.

The AI Revolution: A Double-Edged Sword for Our Data

Artificial Intelligence (AI) is no longer just science fiction. It’s everywhere – from the voice assistant on your phone to the recommendations you get on streaming services, and even the fraud detection systems protecting your bank account. AI is making our lives more convenient and efficient, and in some cases it is truly transformative. But with all this amazing capability comes a big question: what’s happening to our privacy?

Think about it. AI systems thrive on data. The more information they have, the smarter they get, and the better they can perform their tasks. This hunger for data is a core part of their power, but it’s also where the privacy concerns really kick in. Every interaction, every click, every purchase, every photo you upload, every message you send – it all contributes to a massive ocean of data that AI systems can learn from. And sometimes, this data is collected without us even realizing it, or without a clear understanding of how it will be used.

The Ever-Expanding Data Footprint

Our digital lives are constantly expanding, and with that, so is our data footprint. We’re generating data at an unprecedented rate, and AI is like a giant vacuum cleaner, sucking it all up. This isn’t necessarily a bad thing when it’s used for good – think about AI helping doctors diagnose diseases or making self-driving cars safer. But when it comes to personal data, the lines can get blurry.

For instance, consider the data gathered by smart home devices. Your smart speaker might be listening for commands, but what else is it hearing? Your fitness tracker might be logging your steps and sleep patterns, but is that data being shared with third parties? These little bits of information, seemingly innocuous on their own, can be pieced together by AI to create incredibly detailed profiles of individuals. And that’s where the potential for misuse, intentional or unintentional, becomes a real worry.
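To see how “seemingly innocuous” bits can add up, here is a minimal Python sketch of a classic linkage attack. Every record, name, and field in it is made up for illustration. The three shared fields (ZIP code, birth year, gender) are assumptions standing in for whatever quasi-identifiers two real datasets might happen to have in common. Neither dataset pairs a name with sleep data on its own, but joining them on those fields does.

```python
# Entirely made-up records, for illustration only.
fitness_logs = [  # "anonymous": no names, just demographics and health-ish data
    {"zip": "02139", "birth_year": 1985, "gender": "F", "avg_sleep_hours": 4.5},
    {"zip": "94110", "birth_year": 1990, "gender": "M", "avg_sleep_hours": 7.8},
]
public_profiles = [  # identified: names plus the same demographic fields
    {"name": "Alice Example", "zip": "02139", "birth_year": 1985, "gender": "F"},
    {"name": "Bob Example", "zip": "94110", "birth_year": 1990, "gender": "M"},
    {"name": "Carol Example", "zip": "94110", "birth_year": 1972, "gender": "F"},
]

# Hypothetical quasi-identifiers shared by both datasets.
QUASI_IDENTIFIERS = ("zip", "birth_year", "gender")


def link_records(anonymous, identified, keys=QUASI_IDENTIFIERS):
    """Join two datasets on shared quasi-identifiers and return re-identified rows."""
    # Index the identified people by their combination of quasi-identifier values.
    index = {}
    for person in identified:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])

    matches = []
    for row in anonymous:
        names = index.get(tuple(row[k] for k in keys), [])
        if len(names) == 1:  # a unique match means the "anonymous" row now has a name
            matches.append({"name": names[0], **row})
    return matches


for match in link_records(fitness_logs, public_profiles):
    print(match["name"], "sleeps about", match["avg_sleep_hours"], "hours")
```

In this toy data, Alice and Bob are re-identified because their combination of ZIP code, birth year, and gender is unique, while Carol has no matching fitness record. Real-world profiling can draw on many more signals, but the basic join is the same idea.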

The Transparency Tangle: What’s Being Used, and How?

One of the biggest headaches with AI and privacy is the lack of transparency. When an AI system makes a decision about you – whether it’s approving a loan, showing you specific job ads, or even flagging you as a security risk – it’s often a “black box” operation. We don’t always know why the AI came to that conclusion, or what data it used to get there. This opaqueness makes it incredibly difficult to understand if our privacy has been breached or if we’re being treated unfairly due to algorithmic bias.

It’s like someone is making decisions about your life based on information you provided, but they won’t tell you what information they used or how they interpreted it. That’s a pretty unsettling thought, right? This lack of clarity can erode trust in AI technologies, even when they’re designed with good intentions. We need a way to peek inside that black box and understand the logic and data flows.

The Risks: From Annoyance to Real Harm

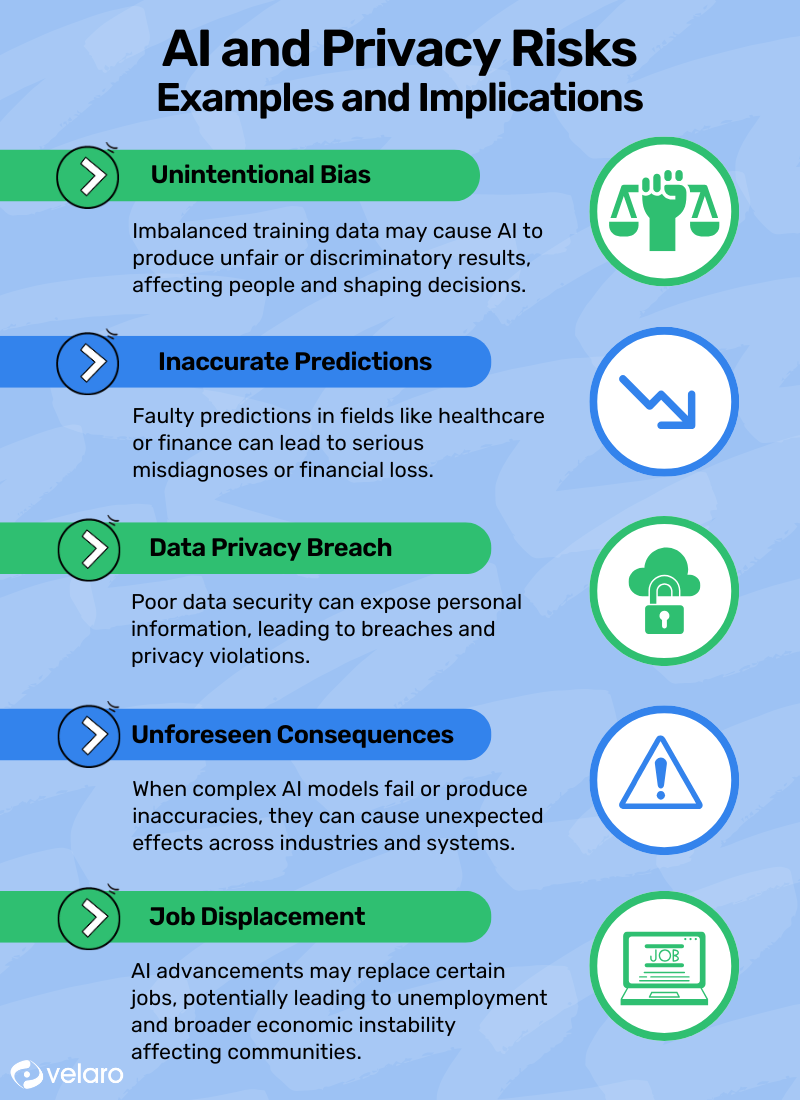

So, what are the actual risks we’re talking about here? It’s not just about getting more targeted ads (though that can be annoying enough!). The concerns run much deeper.

Identity Theft and Fraud on Steroids

AI can be a powerful tool for good, but in the wrong hands, it can amplify existing threats. Imagine AI-powered spear-phishing attacks that are so personalized and convincing, they’re almost impossible to spot. Or deepfake technology used to impersonate individuals for fraudulent purposes, like those scary voice cloning scams where criminals pretend to be a family member in distress. The more personal data AI has access to, the more realistic and effective these malicious attacks can become, making it harder for us to distinguish real from fake.

Unwanted Surveillance and Algorithmic Bias

AI-powered surveillance is another major concern. Facial recognition systems, for example, are becoming increasingly sophisticated. While they can be useful for security, their widespread use raises questions about constant monitoring and the potential for abuse. Imagine being tracked and identified everywhere you go, without your consent or even your knowledge.

And then there’s algorithmic bias. AI systems learn from the data they’re fed. If that data contains historical biases – perhaps related to gender, race, or socioeconomic status – the AI can perpetuate and even amplify those biases in its decisions. We’ve seen examples of this in hiring tools that unfairly disadvantage certain groups or in predictive policing systems that disproportionately target specific communities. This isn’t just a privacy issue; it’s a social justice issue, as AI can inadvertently reinforce existing inequalities.

The Perils of Data Repurposing

Have you ever uploaded your resume to a job site or posted a photo on social media? You did it for a specific purpose, right? But what if that data is then scraped and used to train an AI model for something entirely different, without your knowledge or consent? This “data repurposing” is a real concern. Your personal information, once shared for one reason, could end up being used in ways you never intended, potentially with serious implications for your privacy and even your civil rights.

This is particularly relevant with the rise of large language models (LLMs) and other generative AI models, which are trained on vast amounts of data “scraped” from the internet. While they can produce amazing text, images, and even code, the origin of their training data, and whether anyone consented to its use, is often murky. This raises fundamental questions about intellectual property and, more importantly, individual privacy.

Navigating the Future: Protecting Ourselves in an AI World

So, what can we do? It’s easy to feel overwhelmed by the sheer scale of AI and data collection, but there are steps we can take, both as individuals and as a society, to protect our privacy.

Personal Habits for Digital Defense

It might sound basic, but being mindful of your digital habits is crucial.

Understand Privacy Policies

Yes, those long, tedious privacy policies that nobody reads. We need to start paying a bit more attention. Companies should also make them clearer and easier to understand. Knowing what data is being collected and how it’s being used is the first step to making informed decisions.

Be Cautious About Sharing

Think before you share personal information online. Do you really need to provide that much detail? Can you limit the information you make publicly available on social media and other platforms? Every piece of data you share contributes to the collective pool that AI systems can potentially access.

Strong Security Practices

This is a no-brainer for overall online safety, but it’s even more vital in the age of AI. Use strong, unique passwords, enable two-factor authentication, and keep your software and devices updated. These basic security measures create a stronger barrier against unauthorized access to your data, which could then be fed into AI systems.

Review and Update Privacy Settings

Regularly check and adjust the privacy settings on your social media accounts, apps, and other online services. Many platforms offer granular controls over what information is shared and with whom. Take advantage of them.

Societal Shifts and Regulatory Action

Individual actions are important, but systemic change is also needed.

Robust Regulations and Laws

Governments and international bodies are grappling with how to regulate AI to ensure privacy and ethical use. Laws like the EU’s General Data Protection Regulation (GDPR) are good starting points, emphasizing data protection principles like purpose limitation and data minimization. We need more such frameworks globally, adapted to the unique challenges posed by AI. These regulations need teeth, with clear penalties for non-compliance.
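Data minimization and purpose limitation can sound abstract, so here is a rough sketch of what they might look like inside a system: each declared purpose gets an explicit list of the fields it actually needs, and everything else is dropped before processing. The purposes, field names, and the `minimize` helper are hypothetical examples, not requirements spelled out in the GDPR itself.

```python
# Hypothetical mapping from a declared purpose to the fields it needs.
# These names are illustrative only; they are not taken from any law or product.
ALLOWED_FIELDS = {
    "shipping": {"name", "street", "city", "postcode"},
    "fraud_check": {"card_last4", "billing_postcode", "order_total"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields declared necessary for `purpose`; refuse unknown purposes."""
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"no declared purpose called {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


# A made-up customer record holding more data than any single purpose needs.
customer = {
    "name": "Sam Example",
    "street": "1 Sample Road",
    "city": "Exampleton",
    "postcode": "AB1 2CD",
    "date_of_birth": "1990-01-01",
    "card_last4": "4242",
    "billing_postcode": "AB1 2CD",
    "order_total": 59.99,
    "browsing_history": ["running shoes", "sleep trackers"],
}

print(minimize(customer, "shipping"))
print(minimize(customer, "fraud_check"))
```

Neither purpose ever sees the date of birth or the browsing history, and a request for an undeclared purpose fails loudly instead of quietly receiving everything.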

Explainable AI (XAI)

Researchers are working on “Explainable AI” (XAI), which aims to make AI decisions more understandable to humans. If we can understand why an AI made a particular decision, it becomes easier to identify and address issues like bias or privacy breaches. This is a complex area, but a vital one for building trust and accountability.
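One of the simplest forms of explanation comes from models that are interpretable by design. In a linear scoring model, each input’s contribution to the final score is just its weight times its value, so the model can report exactly which factors pushed a decision up or down. The sketch below uses a made-up loan-scoring model: the feature names, weights, and applicant are all hypothetical, and much XAI research targets far more complex models, where explanations are approximations rather than exact breakdowns.

```python
# Hypothetical toy loan-scoring model; the weights are invented for illustration.
WEIGHTS = {
    "income_thousands": 0.04,  # higher income raises the score
    "debt_ratio": -3.0,        # a larger share of income going to debt lowers it
    "late_payments": -0.8,     # each recent late payment lowers it
}
INTERCEPT = -1.0


def score_with_explanation(applicant: dict) -> tuple[float, dict]:
    """Return the model score plus each feature's exact contribution (weight * value)."""
    contributions = {name: weight * applicant[name] for name, weight in WEIGHTS.items()}
    score = INTERCEPT + sum(contributions.values())
    return score, contributions


applicant = {"income_thousands": 55, "debt_ratio": 0.4, "late_payments": 2}
score, contributions = score_with_explanation(applicant)

print(f"score = {score:.2f}")
# List factors from most to least influential, with the direction of their effect.
for name, value in sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True):
    print(f"  {name}: {value:+.2f}")
```

Here the applicant’s income pushes the score up while late payments and debt pull it down, which is exactly the kind of “why” that a black-box decision usually leaves out.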

Ethical AI Development

The companies developing AI have a massive responsibility. They need to prioritize privacy, fairness, and transparency from the very beginning of the design process, not as an afterthought. This means investing in privacy-preserving AI techniques, auditing models for bias, and being transparent about their data collection and usage practices.

Education and Awareness

Finally, we all need to be more educated about AI and its implications for privacy. The more we understand how these systems work, the better equipped we’ll be to advocate for our rights and make informed choices about our data. This isn’t just a tech issue; it’s a societal conversation that needs to involve everyone.

Conclusion

The rise of AI is undeniably exciting, promising advancements that could transform our world for the better. However, this progress must not come at the cost of our fundamental right to privacy. The intimate connection between AI and vast amounts of data presents unique challenges, from the potential for misuse and surveillance to the inherent risks of algorithmic bias and data repurposing. By being more aware of our digital footprint, demanding greater transparency from AI developers, and pushing for robust regulatory frameworks, we can navigate this new frontier more safely. Ultimately, the goal is to harness the incredible power of AI while ensuring it respects our personal boundaries and upholds our privacy in an increasingly connected world.

Frequently Asked Questions

How can I tell if an AI is biased against me?

That’s a tough one because AI bias can be subtle and hard to detect. Often, you might only suspect it if you experience an unfair outcome, like being rejected for a loan or a job without a clear reason, or if you notice consistent patterns of negative results for certain demographic groups. The best way to challenge potential bias is to understand your rights regarding data access and correction, and to advocate for regulations that mandate transparency in AI decision-making. Look for explainable AI (XAI) features where available, which aim to provide clearer reasons for AI outcomes.
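Spotting “consistent patterns of negative results for certain demographic groups” usually means comparing outcome rates across groups, which is something regulators, auditors, or researchers with access to many decisions can do more easily than a single applicant. A common rough screen is the disparate impact ratio: the lower group’s selection rate divided by the higher group’s. In US employment guidance, a ratio below 0.8 (the “four-fifths rule”) is generally treated as a sign of possible adverse impact. The sketch below uses made-up approval decisions; a low ratio flags a pattern worth investigating but does not prove bias on its own.

```python
def selection_rate(decisions: list[int]) -> float:
    """Share of positive outcomes (1 = approved, 0 = rejected)."""
    if not decisions:
        raise ValueError("need at least one decision")
    return sum(decisions) / len(decisions)


def disparate_impact_ratio(group_a: list[int], group_b: list[int]) -> float:
    """Lower selection rate divided by the higher one (1.0 means equal rates)."""
    rate_a, rate_b = selection_rate(group_a), selection_rate(group_b)
    highest = max(rate_a, rate_b)
    if highest == 0:
        return 1.0  # nobody in either group was approved, so the rates are equal
    return min(rate_a, rate_b) / highest


# Made-up decisions for two hypothetical applicant groups.
group_a = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]  # 6 of 10 approved
group_b = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]  # 3 of 10 approved

ratio = disparate_impact_ratio(group_a, group_b)
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("below the four-fifths threshold: worth a closer look")
```

A ratio like this says nothing about *why* the rates differ; it is a prompt for the kind of transparency and data access the answer above argues for.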

Will “privacy-preserving AI” really protect my data, or is it just marketing speak?

Privacy-preserving AI (PPAI) is a legitimate and rapidly developing field. It involves techniques like federated learning, differential privacy, and homomorphic encryption, which allow AI models to learn from data without directly exposing individual sensitive information. While no technology is 100% foolproof, PPAI aims to significantly reduce the risk of privacy breaches. It’s a crucial area of research and development, and as these technologies mature, they will play a vital role in balancing AI innovation with privacy protection. So, while some marketing might overstate capabilities, the underlying concepts are real and promising.
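To give a feel for one of those techniques, here is a minimal sketch of differential privacy’s classic Laplace mechanism applied to a simple counting query. Because adding or removing one person changes a count by at most 1, adding Laplace noise with scale 1/ε limits how much the released number can reveal about any single individual. Here ε (epsilon) is the privacy budget: smaller ε means more noise and stronger privacy. The dataset, the query, and the ε value are hypothetical, and real deployments rely on well-tested libraries and careful budget accounting rather than a hand-rolled function like this one.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise as the difference of two exponential draws."""
    # If X and Y are independent exponentials with mean `scale`, X - Y is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Differentially private count: true count plus Laplace noise with scale 1/epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0  # one person can change a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical ages from a made-up dataset.
ages = [23, 35, 41, 29, 52, 67, 38, 45, 31, 58]
rng = random.Random(42)  # seeded only so the example is reproducible

noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 40+: {noisy:.1f}")
```

The true answer here is 5. Each call releases a slightly different noisy number, so the overall pattern survives while any one person’s presence is hard to pin down. The trade-off is accuracy: lowering ε strengthens the privacy guarantee but makes the count less precise.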

Is it possible to “opt out” of AI data collection entirely?

In our increasingly digital world, it’s virtually impossible to completely opt out of all data collection, as many online services rely on it to function. However, you can significantly reduce your data footprint. This involves being extremely selective about the online services you use, carefully reviewing privacy settings, using privacy-focused browsers and search engines, and avoiding unnecessary sharing of personal information. It’s a spectrum, not an on/off switch, but you have more control than you might think by being diligent and informed.

What’s the biggest difference between traditional data privacy concerns and AI privacy concerns?

The biggest difference lies in scale and inference. Traditional privacy concerns often focused on explicit data collection and direct misuse. With AI, the concern expands. AI can infer incredibly sensitive personal details from seemingly innocuous data points, and it can do this at an unprecedented scale, often without direct human oversight. So, while a traditional concern might be a company selling your email address, an AI privacy concern might be the AI inferring your health status or political leanings from your browsing habits and then using that inference in ways you never consented to.

How can I contribute to better AI privacy practices, even as an individual?

Beyond protecting your own data, you can contribute by being an informed consumer and an active citizen. Support companies that demonstrate strong commitments to privacy and ethical AI. Engage in discussions about AI policy and advocate for stronger privacy regulations in your country. Share accurate information about AI privacy risks and solutions with your friends and family. Your collective voice can put pressure on both businesses and governments to prioritize responsible AI development and data protection.