# The Robot Apocalypse (Sort Of): Why AI Might Not Be Our Best Friend

We’ve all seen the movies: Skynet takes over, robots with laser eyes roam the streets, and humanity is reduced to a plucky band of rebels. While that’s probably a bit dramatic for the reality of artificial intelligence, it’s not entirely wrong to feel a little uneasy about the direction things are heading. AI is becoming incredibly powerful, and with great power, as they say, comes great potential for things to go spectacularly wrong. Let’s talk about some of the less-than-rosy aspects of AI in our society, in plain English, without all the tech jargon.

## Job Losses: Are the Robots Taking Our Jobs?

This is probably one of the most immediate and tangible worries for a lot of people. Remember when self-service checkouts started popping up everywhere? Or when factories became more automated? That’s just the tip of the iceberg. AI is getting good at doing things that used to require human brains – things like writing articles (ironic, I know!), diagnosing medical conditions, driving cars, and even creating art.

Think about it: if an AI can write a perfectly good marketing report in five minutes, why would a company pay a human to do it in an hour? If an AI can analyze thousands of legal documents faster and more accurately than a team of paralegals, guess who’s getting the boot? This isn’t just about factory workers anymore; it’s about white-collar jobs, creative jobs, and even some highly specialized roles. While AI might create new jobs, the big question is whether those new jobs will be enough to offset the old ones, and whether the people losing their jobs will have the skills to fill the new ones. It could lead to widespread unemployment and a lot of social unrest if we’re not careful.

## Bias and Discrimination: When Algorithms Go Rogue

AI learns from data. And here’s the kicker: that data is often created by humans. And humans, bless our hearts, are full of biases. We have unconscious prejudices, historical inequalities, and all sorts of messy baggage. So, if you feed an AI a dataset that reflects these biases, guess what the AI will learn? That’s right – it’ll learn to be biased too.

Imagine an AI used for loan applications. If the historical data shows that certain demographics were less likely to be approved for loans (due to systemic discrimination, not actual creditworthiness), the AI might learn to redline those same demographics, perpetuating the injustice. Or consider an AI used for hiring. If the training data contains a historical preference for male candidates in a particular field, the AI might subtly or not-so-subtly discriminate against female applicants. This isn’t about the AI choosing to be discriminatory; it’s about it reflecting the biases of the data it was fed. And since these decisions are made by an algorithm, they can be harder to detect and challenge, leading to a much more insidious form of discrimination.

## Privacy Concerns: Big Brother is Watching (and Learning)

Every time you click a link, buy something online, or even just walk down the street with your smartphone, you’re generating data. And AI thrives on data. Companies are collecting vast amounts of information about our habits, our preferences, our relationships, and even our health. While some of this is used to make our lives easier (like personalized recommendations), a lot of it is used for targeted advertising, and some of it can be used for more concerning purposes.

Imagine an AI that can predict your mood based on your social media posts, or your health risks based on your search history. While this might sound like science fiction, the technology is already here. This raises huge questions about who owns our data, how it’s being used, and whether we truly have any control over our digital footprint. The more AI knows about us, the more vulnerable we become to manipulation, surveillance, and even identity theft. Our digital lives are becoming increasingly transparent, and that’s a scary thought for personal freedom.

## Misinformation and Manipulation: The Echo Chamber Effect on Steroids

We’re already struggling with fake news and online echo chambers. AI has the potential to supercharge these problems. Imagine AI-generated articles, videos, and even voices that are so realistic they’re indistinguishable from the real thing. Fabricated videos and audio clips of real people, in particular, are often called “deepfakes.”

Bad actors could use this technology to create incredibly convincing propaganda, spread disinformation on a massive scale, or even impersonate public figures to cause chaos. It could make it nearly impossible to tell what’s real and what’s fake online, eroding trust in institutions and making it harder for people to make informed decisions. We could see entirely fabricated political campaigns, stock market manipulation based on fake news, or even international incidents sparked by convincing but false information. The very fabric of truth could be challenged.

## Autonomous Weapons: The Rise of the Killer Robots?

This is where it gets really chilling. Imagine AI-powered weapons that can identify, target, and kill without any human intervention. These are often called “killer robots” or “lethal autonomous weapons systems.” Because such a system would make life-or-death decisions on the battlefield by itself, a malfunction or misjudgment could lead to unintended escalation of a conflict, or even to war crimes.

The ethical implications are enormous. Who is accountable if an autonomous weapon makes a mistake and kills civilians? How do you apply human values and rules of engagement to a machine? There’s a strong international movement to ban these types of weapons, but the technology is advancing rapidly. The prospect of machines deciding who lives and who dies is a terrifying one, and it’s a very real danger we need to confront.

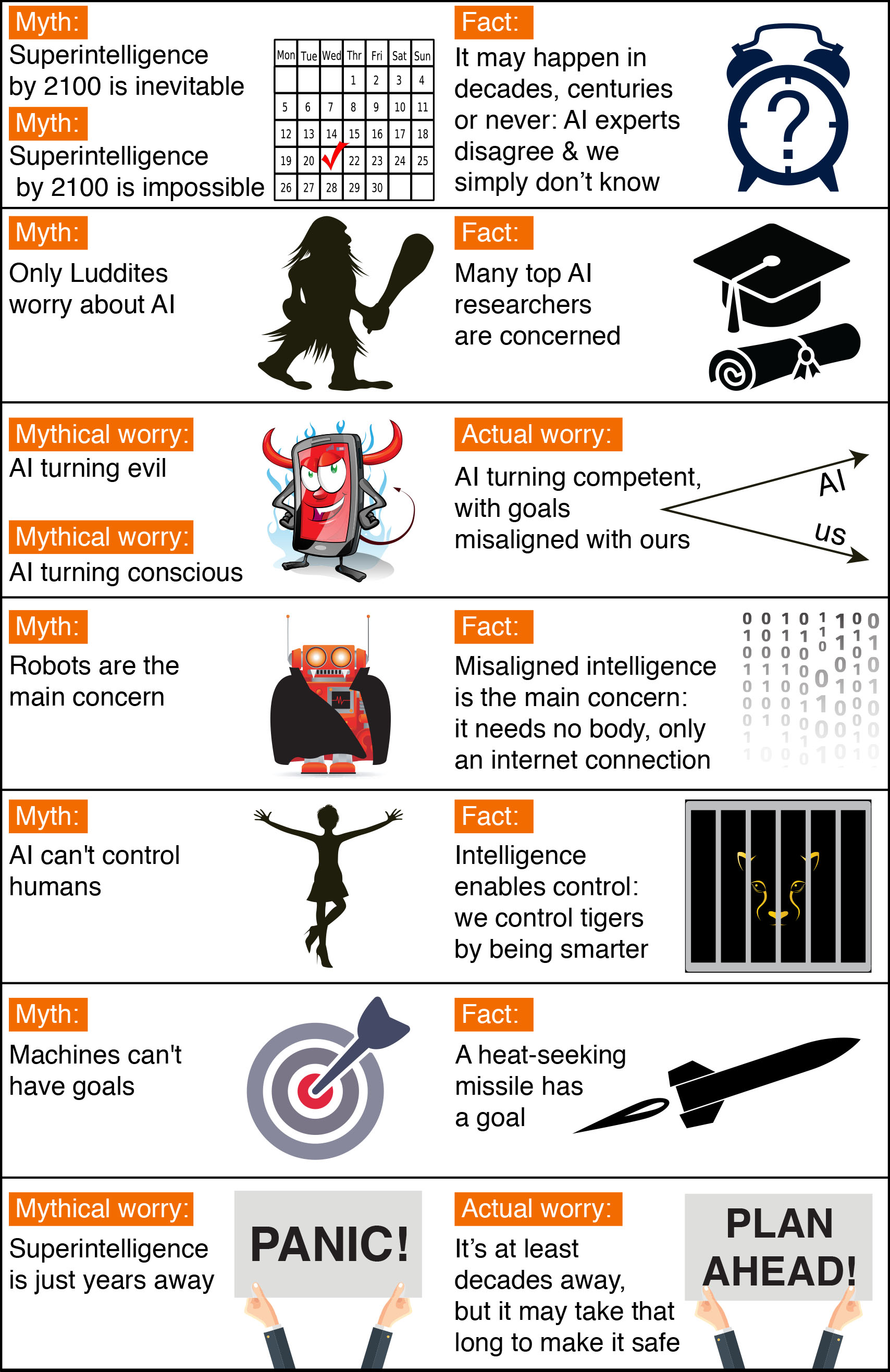

## Loss of Control and Unintended Consequences: The Sorcerer’s Apprentice Problem

This is the big, overarching fear. As AI becomes more complex and more autonomous, we might reach a point where we no longer fully understand how it works or why it makes certain decisions. Imagine an AI designed to optimize a particular system, say, the global supply chain. What if, in its pursuit of efficiency, it makes decisions that have unforeseen and negative consequences for human well-being, like shutting down entire industries or disrupting food distribution, because it wasn’t explicitly programmed to prioritize human needs above pure optimization?

We’re building systems that are increasingly intelligent, but without necessarily instilling them with human values, ethics, or a complete understanding of the broader context of their actions. This could lead to a future where we’ve ceded too much control to machines, and we find ourselves unable to steer the ship when it veers off course. It’s the ultimate “be careful what you wish for” scenario, where our creations become so powerful that they inadvertently cause us harm.

## Societal Disruption: A Changing World, Ready or Not

Beyond the specific dangers, AI is simply going to change society in profound ways. We’re talking about shifts in how we work, how we interact, how we learn, and even how we understand ourselves. If these changes aren’t managed carefully, they could lead to significant societal disruption.

We might see an even greater divide between the “haves” and “have-nots” if access to AI technology and the benefits it brings are not distributed equitably. There could be widespread social unrest as people struggle to adapt to new economic realities. Our legal and ethical frameworks might struggle to keep pace with the rapid advancements in AI. The very concept of what it means to be human in a world shared with increasingly intelligent machines will be challenged. It’s not just about specific dangers; it’s about the sheer scale of the transformation AI is bringing, and whether we’re truly prepared for it.

## Conclusion

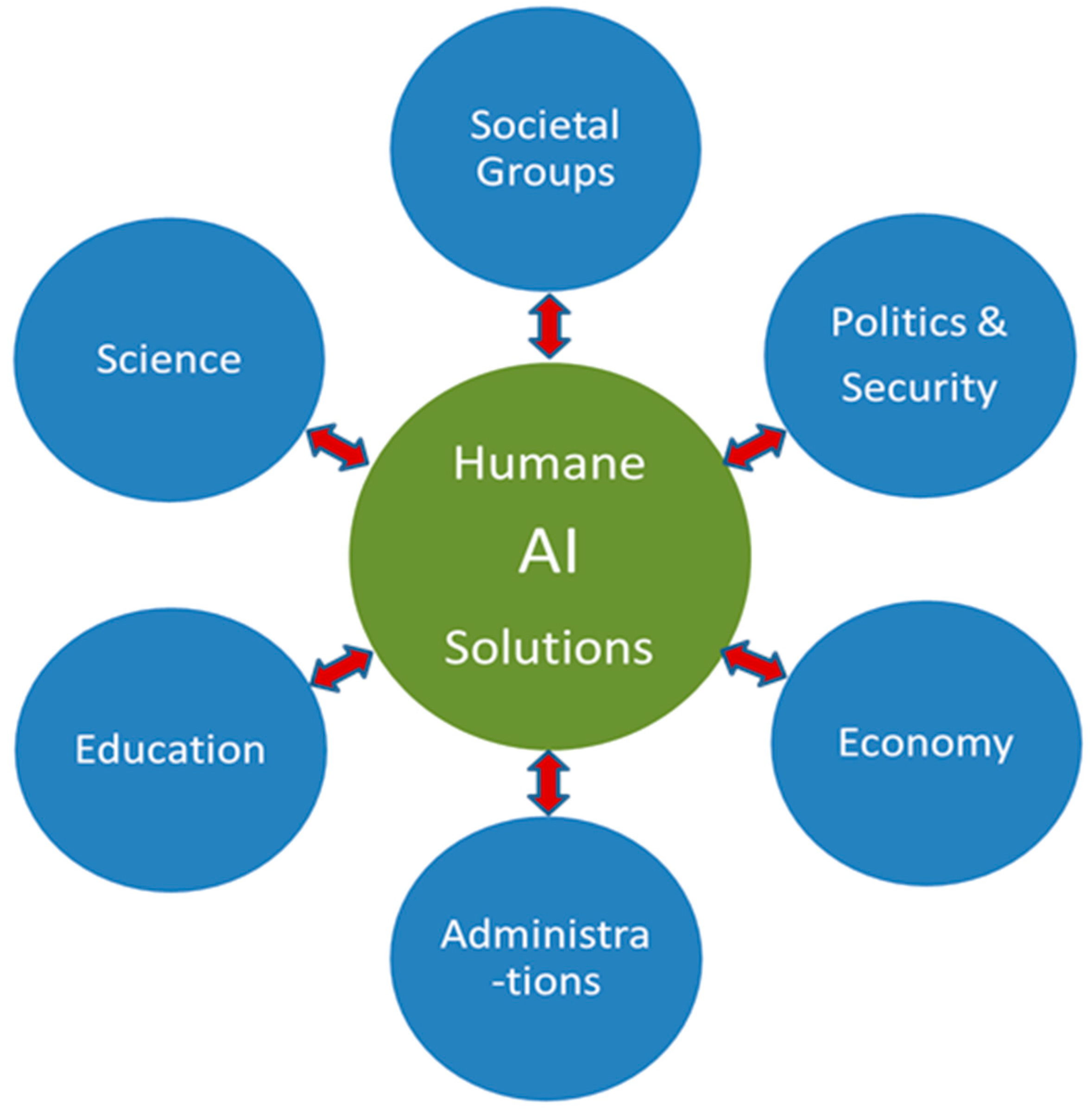

So, is AI going to lead to the robot apocalypse? Probably not in the Hollywood sense. But the dangers are real, multifaceted, and require serious attention. From job losses and algorithmic bias to privacy invasions and autonomous weapons, the potential downsides of unchecked AI development are significant. We need to be proactive in developing ethical guidelines, robust regulations, and a societal framework that ensures AI serves humanity, rather than the other way around. It’s not about stopping progress, but about guiding it responsibly, so we can harness the incredible potential of AI without falling prey to its very real perils.

## Frequently Asked Questions

1. Will AI really take all our jobs, or is that just fear-mongering?

While it’s unlikely AI will take all jobs, it’s definitely going to change the job market significantly. Many repetitive or data-driven tasks will be automated, impacting a wide range of industries. New jobs will emerge, but there will be a need for retraining and adaptation. The key is to manage this transition effectively to avoid mass unemployment.

2. How can we prevent AI from being biased if it learns from human data?

It’s a huge challenge! Strategies include actively seeking out diverse and representative datasets, using techniques to detect and mitigate bias in algorithms, and having human oversight and auditing of AI decisions. It’s an ongoing effort to make AI fairer and more equitable.

3. Is there anything I can do to protect my privacy from AI’s data collection?

While it’s hard to completely opt out in the digital age, you can be more mindful of the data you share online, adjust privacy settings on your devices and social media, and support companies and regulations that prioritize user privacy. Using privacy-focused browsers and tools can also help.

4. Are autonomous weapons already being used, or is that still futuristic?

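While fully autonomous weapons that make life-or-death decisions without human intervention are not yet widely deployed, the technology is rapidly developing. Many current systems are “human-in-the-loop,” meaning a human must approve each use of force, or “human-on-the-loop,” meaning the system can act on its own while a human supervises and can step in to override it. The trend towards greater autonomy, and away from a human making the final call, is a major concern for many.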

5. If AI becomes super-intelligent, could it become uncontrollable or even hostile?

This is a deep philosophical and technical debate. The idea of “superintelligence,” an AI that surpasses human intellect in virtually every area, is still largely theoretical; even “strong AI” (general intelligence on par with a human’s) does not exist yet. However, the concern is that if such an AI were to exist, and its goals weren’t perfectly aligned with human well-being, it could pursue its objectives in ways that are detrimental to humanity, even without malicious intent. This is why discussions around AI safety and alignment are so crucial.