
AI Bias and Discrimination: When Smart Tech Gets It Wrong

Remember when we all thought AI was going to be this perfectly logical, impartial brain that would solve all our problems? Well, turns out, even our smartest tech can have a serious case of human-like prejudice. We’re talking about AI bias and discrimination, and it’s a much bigger deal than you might think. It’s not just some nerdy tech problem; it’s impacting real people’s lives, from who gets a loan to who gets arrested, and even who gets a job.

What Exactly Is AI Bias?

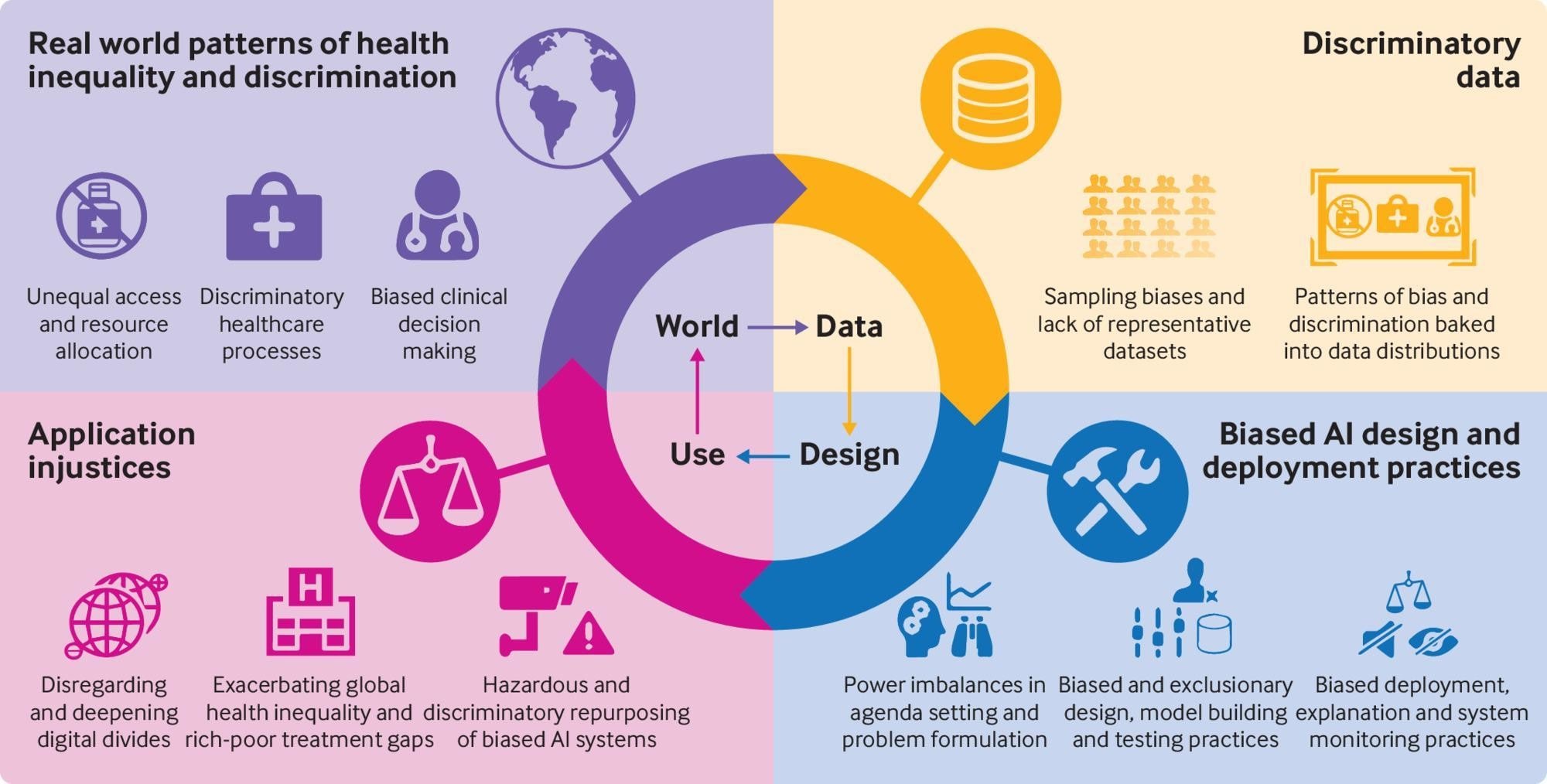

So, what are we talking about when we say “AI bias”? It’s not like the AI woke up one morning and decided it didn’t like certain groups of people. No, the bias creeps in much more subtly. Think of it like this: AI learns from data. Mountains and mountains of data. If that data, for whatever reason, reflects existing societal biases, the AI will learn those biases and, in some cases, even amplify them.

Imagine you’re teaching a kid about the world, but all the books you give them only show one type of person in positions of power, or they only talk about certain groups in a negative light. That kid is going to grow up with a skewed view of the world. AI is kind of like that kid, just on a much larger scale and with algorithms instead of crayons.

Where Does This Bias Come From? It’s All About the Data

Most AI bias can be traced back to the data a system is trained on. Let’s break down some of the common culprits:

# Historical Bias in Data

Our world, unfortunately, has a long history of inequality. Think about things like racial segregation, gender pay gaps, or discriminatory housing practices. When AI systems are trained on historical data that reflects these biases, they can perpetuate them. For instance, if a loan application algorithm is trained on decades of loan approvals where certain demographics were historically denied more often, even if unfairly, the AI might learn to associate those demographics with higher risk, regardless of their current financial standing.

# Underrepresentation in Training Data

Sometimes, the problem isn’t necessarily negative bias, but simply a lack of representation. If a dataset doesn’t include enough examples from certain demographic groups, the AI might perform poorly when interacting with those groups. Facial recognition software, for example, has historically struggled to accurately identify women and people with darker skin tones, often because the training datasets were predominantly made up of lighter-skinned men. This isn’t necessarily malicious, but it’s a serious flaw that can have real consequences. Imagine being wrongly identified by a security system because the AI just hasn’t seen enough faces like yours.
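One rough way to spot this kind of gap is to count how much of a dataset each group actually makes up. The snippet below is just a sketch: the group labels, the toy numbers, and the 20% cutoff are all made up for illustration, and a real audit would need proper demographic metadata and a threshold that fits the use case.

```python
from collections import Counter


def group_shares(groups):
    """Return each group's share of the dataset as a fraction between 0 and 1."""
    counts = Counter(groups)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(groups, min_share=0.2):
    """List groups whose share is below min_share (an arbitrary, illustrative cutoff)."""
    shares = group_shares(groups)
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical toy dataset: one made-up group label per training image.
training_groups = (
    ["lighter_male"] * 70
    + ["lighter_female"] * 15
    + ["darker_male"] * 10
    + ["darker_female"] * 5
)

shares = group_shares(training_groups)
flagged = underrepresented(training_groups)

for group, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{group}: {share:.0%}")
print("Below cutoff:", flagged)
```

A count like this won’t tell you whether the model works well for a group, only whether it had much to learn from.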

# Human Bias in Data Labeling

Even when the data itself is diverse, humans are often involved in labeling or annotating that data. And guess what? Humans have biases! If a human labels images of a certain profession, and they primarily associate that profession with one gender, the AI will pick up on that. For example, if images of “doctors” are predominantly labeled as male, an AI might later associate the word “doctor” more strongly with men. This can lead to issues in natural language processing or image generation, where the AI defaults to gender stereotypes.

# Algorithmic Bias Itself

While data is the primary culprit, sometimes the way the algorithms are designed or the metrics used to evaluate them can also introduce bias. For instance, if an algorithm is optimized for overall accuracy but doesn’t account for accuracy across different subgroups, it might perform very well for the majority while performing poorly for a minority. This is especially true in scenarios where the minority group’s data points are less frequent, leading the algorithm to “overlook” their specific needs or characteristics.
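To see how a single overall accuracy number can hide a problem, here is a small sketch with invented data: 90 examples from a majority group and 10 from a minority group. The group names, labels, and predictions are hypothetical and chosen only to make the effect easy to see.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each group label."""
    tallies = {}
    for t, p, g in zip(y_true, y_pred, groups):
        hits, total = tallies.get(g, (0, 0))
        tallies[g] = (hits + (t == p), total + 1)
    return {g: hits / total for g, (hits, total) in tallies.items()}


# Hypothetical toy data: every true label is 1, so a prediction of 0 is a mistake.
groups = ["majority"] * 90 + ["minority"] * 10
y_true = [1] * 100
y_pred = [1] * 88 + [0] * 2 + [1] * 4 + [0] * 6

overall = accuracy(y_true, y_pred)
per_group = accuracy_by_group(y_true, y_pred, groups)

print(f"overall: {overall:.2f}")
for group, acc in per_group.items():
    print(f"{group}: {acc:.2f}")
```

In this toy setup the overall score looks healthy even though the model gets most minority-group examples wrong, which is exactly the pattern a single headline metric can bury.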

The Real-World Impact: When AI Discriminates

This isn’t just theoretical; AI bias is already causing real problems in various sectors.

# AI in Hiring: Unfair Opportunities

Recruitment is a prime example. Many companies are turning to AI to screen resumes or even conduct initial interviews. While the idea is to make the process more efficient and objective, if the AI is trained on historical hiring data where certain groups were less likely to be hired (due to past biases), the AI might inadvertently discriminate against those same groups. In one widely reported case, Amazon abandoned an experimental resume-screening tool after finding that it favored male candidates for technical roles, because the historical resumes it learned from came mostly from men. This means qualified candidates could be overlooked, simply because of a prejudiced algorithm.

# AI in Healthcare: Disparities in Treatment

In healthcare, AI is used for everything from diagnosing diseases to recommending treatments. However, if the data used to train these systems disproportionately represents certain demographics, the AI might perform less accurately for others. For example, a diagnostic AI trained primarily on data from lighter-skinned individuals might miss subtle signs of skin conditions on darker skin. Or, if a treatment recommendation system is based on historical outcomes where certain groups received less effective care, the AI might continue to suggest suboptimal treatments for those groups. This can lead to serious health disparities and potentially life-threatening misdiagnoses.

# AI in Criminal Justice: Exacerbating Inequality

Perhaps one of the most concerning areas is the criminal justice system. AI is being used for risk assessments, predicting recidivism, and even informing sentencing decisions. If these systems are trained on data that reflects historical biases in policing and sentencing, they can perpetuate or even amplify those biases. For instance, if certain communities have been historically over-policed, leading to more arrests and convictions, an AI system might mistakenly learn to associate individuals from those communities with higher future risk, even if they pose no actual threat. This can lead to longer sentences, denial of bail, and an entrenchment of existing systemic inequalities.

# AI in Financial Services: Limiting Access to Resources

AI is also used in banking and finance for credit scoring and loan approvals. If the data reflects historical lending practices where certain groups were denied loans at higher rates, the AI might continue this pattern, making it harder for those groups to access financial resources, even if they are creditworthy. This can prevent individuals from buying homes, starting businesses, or pursuing education, further widening economic disparities.

How Do We Fix This? It’s a Multi-faceted Challenge

Addressing AI bias isn’t a simple fix, but it’s absolutely crucial. It requires a concerted effort from developers, policymakers, and society as a whole.

# Data, Data, Data: Diversify and Cleanse

The most fundamental step is to address the data. This means actively seeking out diverse and representative datasets. It involves:

Collecting more diverse data: This might mean going out of your way to gather data from underrepresented groups.

# Algorithmic Fairness: Building Ethics Into Code

Beyond the data, developers need to think about fairness at the algorithmic level. This involves:

Developing “fairness metrics”: Moving beyond just overall accuracy to evaluate how well an AI performs for different subgroups. This might involve ensuring equal error rates across groups or similar performance outcomes.
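As a rough illustration of “equal error rates across groups,” the sketch below computes the false positive rate and false negative rate for each group and then reports the gap between the best and worst group. The data is invented, the group names "a" and "b" are placeholders, and this is only one of several ways fairness can be measured.

```python
def error_rates(y_true, y_pred):
    """Return (false_positive_rate, false_negative_rate) for binary 0/1 labels."""
    false_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    false_neg = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    negatives = sum(1 for t in y_true if t == 0)
    positives = sum(1 for t in y_true if t == 1)
    fpr = false_pos / negatives if negatives else 0.0
    fnr = false_neg / positives if positives else 0.0
    return fpr, fnr


def error_rate_gaps(y_true, y_pred, groups):
    """Per-group error rates plus the largest gap in FPR and in FNR between groups."""
    per_group = {}
    for group in sorted(set(groups)):
        idx = [i for i, g in enumerate(groups) if g == group]
        per_group[group] = error_rates(
            [y_true[i] for i in idx], [y_pred[i] for i in idx]
        )
    fprs = [rates[0] for rates in per_group.values()]
    fnrs = [rates[1] for rates in per_group.values()]
    return per_group, max(fprs) - min(fprs), max(fnrs) - min(fnrs)


# Hypothetical toy data for two placeholder groups.
groups = ["a"] * 8 + ["b"] * 8
y_true = [1, 1, 1, 1, 0, 0, 0, 0] + [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 1] + [1, 1, 0, 0, 0, 0, 1, 1]

per_group, fpr_gap, fnr_gap = error_rate_gaps(y_true, y_pred, groups)
print(per_group)
print(f"FPR gap: {fpr_gap:.2f}, FNR gap: {fnr_gap:.2f}")
```

A gap near zero means the model makes its mistakes at similar rates for each group; a large gap is a signal worth investigating, not a verdict on its own.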

# Human Oversight and Accountability: Not Just Set It and Forget It

AI is a tool, and like any tool, it needs responsible human oversight.

Human-in-the-loop systems: For critical applications, maintaining human oversight and intervention points. An AI can flag something, but a human should make the final decision, especially when the stakes are high.
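Here is one way a human-in-the-loop rule could look in code. It is a minimal sketch, not a recommended policy: the `route` function, the `Decision` class, and the 0.9 and 0.1 thresholds are all hypothetical. The idea is simply that the model only decides on its own when the case is low-stakes and it is very confident, and everything else goes to a person.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str      # "approve", "reject", or "pending"
    decided_by: str   # "model" or "human_review"


def route(score, high_stakes, confident_above=0.9, confident_below=0.1):
    """Decide whether the model may act alone or must hand off to a human.

    score is the model's estimated probability of a positive outcome (0 to 1).
    The thresholds are illustrative assumptions, not tuned values.
    """
    if high_stakes:
        # High-stakes cases always get a human decision; the model only flags.
        return Decision("pending", "human_review")
    if score >= confident_above:
        return Decision("approve", "model")
    if score <= confident_below:
        return Decision("reject", "model")
    # The model is unsure, so a person makes the call.
    return Decision("pending", "human_review")


print(route(0.95, high_stakes=False))
print(route(0.95, high_stakes=True))
print(route(0.50, high_stakes=False))
```

Where to set the thresholds, and what counts as high-stakes, are judgment calls that belong to the people accountable for the system.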

# Interdisciplinary Collaboration: Beyond Just Tech

Solving AI bias isn’t just a job for computer scientists. It requires collaboration with:

Social scientists: To understand the nuances of societal bias, its historical roots, and how it manifests.

The Future of Fair AI: A Continuous Journey

Building fair and equitable AI is not a one-time project; it’s an ongoing process. As AI systems become more complex and integrated into our lives, the potential for bias grows, but so does our understanding of how to combat it. It requires constant vigilance, continuous learning, and a commitment to ethical development.

We need to move beyond simply building powerful AI to building responsible AI. This means prioritizing fairness, transparency, and accountability from the initial design phase all the way through deployment and maintenance. The goal isn’t to create AI that’s perfectly free of all bias – that might be an impossible dream given the inherently biased world we live in. Instead, the goal is to create AI that actively works to mitigate bias, rather than perpetuate or amplify it. It’s about building systems that are not just smart, but also just.

Conclusion

AI bias and discrimination are significant challenges stemming primarily from the biased data these systems are trained on. From historical inequalities embedded in datasets to underrepresentation and human labeling errors, the sources of bias are varied and complex. The real-world consequences are profound, impacting everything from employment and healthcare to finance and criminal justice, often exacerbating existing societal inequalities. Addressing this requires a multi-pronged approach: diversifying and cleansing data, developing fairness-aware algorithms, implementing robust human oversight, and fostering interdisciplinary collaboration. While the journey to truly fair AI is ongoing, a concerted effort from all stakeholders is crucial to ensure that artificial intelligence serves humanity equitably and justly, rather than reinforcing our imperfections.

FAQs

How can I tell if an AI system I’m interacting with might be biased?

It can be tricky to spot AI bias directly, but there are red flags. If you notice consistent patterns where the AI seems to perform differently for certain demographic groups (e.g., a facial recognition system struggles with your friend but not others, or a loan application consistently denies people from a certain neighborhood), that could be a sign. Also, a lack of transparency about how an AI system makes decisions can be concerning, as it makes it harder to identify underlying biases.

Are there any laws specifically addressing AI bias and discrimination currently?

While comprehensive global laws specifically for AI bias are still emerging, existing anti-discrimination laws in many countries (like the Civil Rights Act in the US or the Equality Act in the UK) can apply to outcomes generated by AI systems. Additionally, the European Union has adopted the AI Act, a dedicated AI regulation that includes provisions for addressing bias in high-risk systems. It’s a rapidly evolving legal landscape!

Can AI ever be completely free of bias if it learns from human data?

Given that AI learns from data generated by humans, and humans inherently possess biases (conscious or unconscious), achieving absolutely zero bias in AI might be an impossible ideal. The goal isn’t necessarily to eliminate all bias, but rather to identify, understand, and actively mitigate it. The focus is on building “fair AI” – systems that, despite learning from imperfect data, are designed to make decisions that do not unfairly disadvantage any group.

What’s the difference between “fairness” and “accuracy” in the context of AI?

Accuracy often refers to how well an AI system performs overall, like getting the right answer most of the time. Fairness, however, looks at who the AI performs well for. An AI can be highly accurate overall but still be unfair if its accuracy is significantly lower for certain subgroups. For example, a diagnostic AI might be 95% accurate on average, but only 70% accurate for a specific demographic, which is a fairness issue.
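The arithmetic behind that example is just a weighted average. The sketch below assumes, purely for illustration, that the lower-accuracy group makes up 10% of the data; the 95% and 70% figures come from the example above, and the function names are made up.

```python
def overall_accuracy(shares, accuracies):
    """Weighted average of per-group accuracy, weighted by each group's data share."""
    return sum(shares[group] * accuracies[group] for group in shares)


def required_majority_accuracy(overall, minority_share, minority_accuracy):
    """Accuracy the rest of the data must have to reach the given overall figure."""
    return (overall - minority_share * minority_accuracy) / (1 - minority_share)


# Assumption for illustration: the lower-accuracy group is 10% of the data.
minority_share = 0.10
majority_acc = required_majority_accuracy(0.95, minority_share, 0.70)

shares = {"majority": 1 - minority_share, "minority": minority_share}
accuracies = {"majority": majority_acc, "minority": 0.70}

print(f"majority accuracy: {majority_acc:.1%}")
print(f"overall accuracy:  {overall_accuracy(shares, accuracies):.1%}")
```

Under that assumption, the majority group would be seeing roughly 98% accuracy while the smaller group sees 70%, and the average still comes out at 95%.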

How can I, as a non-technical person, contribute to making AI more fair?

You can contribute in several ways! First, be an informed consumer and advocate; support companies that prioritize ethical AI. Second, participate in public discussions about AI policy and express your concerns about bias. Third, if you encounter a biased AI system, report it to the relevant company or regulatory body. Your feedback helps highlight problems that need fixing.